
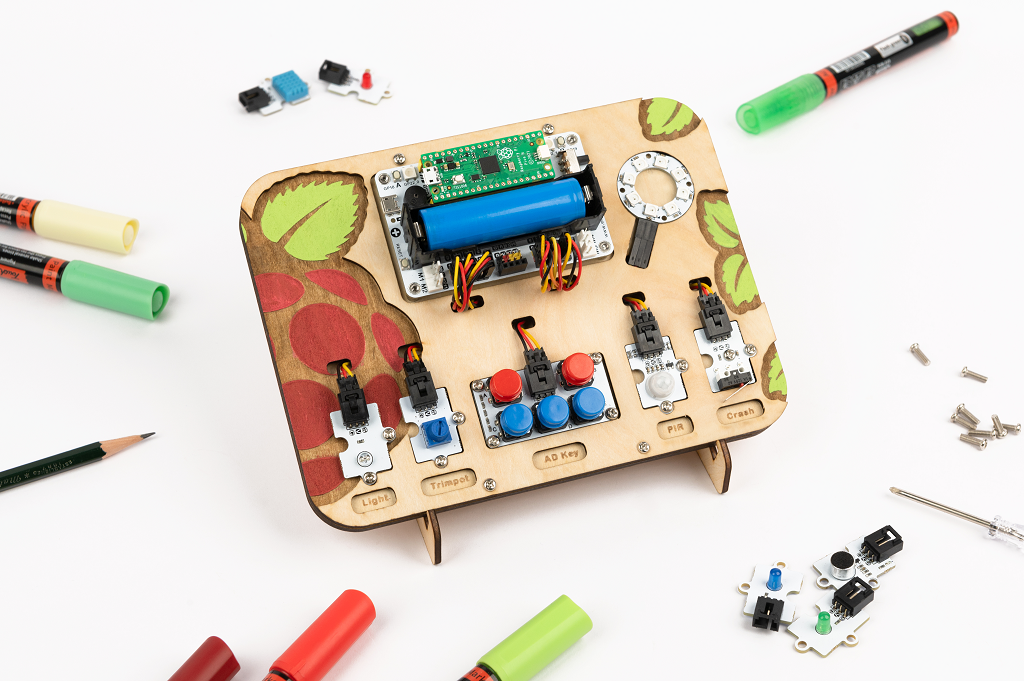

This tutorial covers sensor fusion with the RP2040. The reference tutorial is at:

https://docs.edgeimpulse.com/docs/tutorials/end-to-end-tutorials/sensor-fusion

Neural networks are not limited to processing one type of data at a time. One of their biggest advantages is that they can be very flexible with input data types as long as the format and order of the data remain unchanged from training to inference. Therefore, we can use them to perform sensor fusion for various tasks.

Sensor fusion is the process of combining data from different types of sensors or similar sensors installed in different locations, which gives us more information to make decisions and classify. For example, you can combine temperature data with accelerometer data to better understand potential anomalies!
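As a rough illustration of why the format and order of the data matter, here is a minimal Python sketch that flattens one multi-sensor reading into a fixed-order vector. The axis names, the `fuse` helper, and the sample values are all hypothetical and are not part of Edge Impulse or the wukong2040 firmware.

```python
# Hypothetical axis order. The only requirement is that the same order
# is used when training the model and when running inference.
AXIS_ORDER = ("temperature", "humidity", "pressure", "brightness")


def fuse(reading):
    """Flatten one multi-sensor reading into a vector with a fixed axis order."""
    missing = [name for name in AXIS_ORDER if name not in reading]
    if missing:
        raise KeyError(f"missing sensor axes: {missing}")
    return [float(reading[name]) for name in AXIS_ORDER]


# Made-up values, for illustration only. The dictionary order differs
# from AXIS_ORDER on purpose: the fused vector is still consistent.
sample = {"humidity": 41.0, "temperature": 22.5, "brightness": 310, "pressure": 101.2}
fused = fuse(sample)
print(fused)
```

Whatever order is chosen, a model trained on vectors built this way should only ever be fed vectors built the same way.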

In this tutorial, you will learn how to use Edge Impulse to perform sensor fusion on wukong2040.

For this tutorial, you need a supported device, namely wukong2040.

In this demo, we’ll show you how to identify different environments by fusing temperature, humidity, pressure, and light data. In particular, I will have the board identify different rooms in my home as well as the outdoors. Note that we’re assuming the environment is static: if I turn off the lights or the outside temperature changes, the model won’t work. However, it demonstrates how we can combine different sensor data with machine learning for classification!

Since we will be collecting data from the wukong2040 connected to a computer, it would be helpful to have a laptop that can be moved to different rooms.

Create a new project on Edge Impulse Studio. Connect wukong2040 to your computer. Upload the Edge Impulse firmware to the board and connect it to your project.

Go to Data acquisition. Under “Record new data,” select your device and set the label to “Bedroom.” Set the sensor to Environment + Interaction, the sample length to 10000 milliseconds, and the frequency to 12.5 Hz.

Stand in one of your rooms with the wukong2040 (and laptop). Click Start Sampling and slowly move the board while collecting data. Once sampling is complete, you should see a new data plot with a different line for each sensor.

Repeat this process to record approximately 3 minutes of data labeled Bedroom. When collecting data, try standing in different locations around the room, because we want a robust data set that represents the characteristics of each room. Go to another room and repeat the data collection. Continue doing this until you have approximately 3 minutes of data for each of the following categories: Bedroom, Hallway, Outside.

Try other rooms or locations. In this demo, I found that my bedroom, kitchen, and living room all exhibited similar ambient and lighting characteristics, making it difficult for the model to differentiate between them.

An impulse is a combination of preprocessing (DSP) blocks and machine learning blocks. It cuts our data into smaller windows, uses signal processing to extract features, and then trains a machine-learning model. Because we are working with environment and light data, which are slow-moving averages, we will use a Flatten block for preprocessing.

Go to Create Impulse. Change the window increase to 500 milliseconds. Add a Flatten block. Note that you can select which environmental and interaction sensor data to include. Deselect Proximity and Gestures, as we don’t need them to detect the room. Add a Classification (Keras) learning block. Click Save Impulse.

Go to Flatten. You can select different samples and move the window to view the DSP results for each set of features to be sent to the learning module.

The Flatten block calculates the mean, minimum, maximum, root mean square, standard deviation, skewness, and kurtosis for each axis (e.g. temperature, humidity, brightness, etc.). With 7 axes and 7 features per axis, that gives 49 features for each window sent to the learning block. You can view these calculated features under Processed Features.
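To make the 7 × 7 = 49 arithmetic concrete, here is a minimal Python sketch of the seven statistics applied to one window. It is not the Edge Impulse implementation. It assumes population (divide-by-n) moments and excess kurtosis, and the axis names and values in the example window are made up.

```python
import math


def flatten_axis(values):
    """Return [mean, min, max, rms, std, skewness, kurtosis] for one axis."""
    n = len(values)
    mean = sum(values) / n
    # Central moments, population form (divide by n). This is an assumption.
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    rms = math.sqrt(sum(v * v for v in values) / n)
    std = math.sqrt(m2)
    # A constant signal has zero variance; report 0.0 instead of dividing by zero.
    skewness = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    kurtosis = m4 / m2 ** 2 - 3.0 if m2 > 0 else 0.0  # excess kurtosis
    return [mean, min(values), max(values), rms, std, skewness, kurtosis]


def flatten_window(window):
    """Concatenate the per-axis statistics, keeping the axis order of the dict."""
    features = []
    for axis_values in window.values():
        features.extend(flatten_axis(axis_values))
    return features


# Hypothetical window: 7 axes with made-up readings.
window = {
    "temperature": [22.4, 22.5, 22.5, 22.6],
    "humidity": [40.9, 41.0, 41.2, 41.1],
    "pressure": [101.2, 101.2, 101.3, 101.2],
    "brightness": [300.0, 310.0, 305.0, 320.0],
    "red": [120.0, 118.0, 121.0, 119.0],
    "green": [95.0, 96.0, 94.0, 95.0],
    "blue": [80.0, 80.0, 80.0, 80.0],
}
features = flatten_window(window)
print(len(features))  # 7 axes x 7 statistics
```

Because these signals change slowly, summary statistics like these retain most of what distinguishes one room from another while shrinking each window to a short, fixed-length vector.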

Click Save Parameters. On the next screen, select Calculate feature importance and click Generate features.

After a while, you should be able to browse the features of your dataset to see if your classes can be easily separated into categories.

With the dataset collected and the features processed, we can train a machine learning model. Click NN Classifier. Change the number of training cycles to 300 and click Start Training. In this demonstration, we will keep the neural network architecture as the default.

During training, the parameters in the neurons of the neural network are gradually updated so that the model will try to guess the category of each set of data as accurately as possible. Once training is complete, you should see the Model panel on the right side of the page.

A confusion matrix gives you an idea of how well your model performs when classifying different data sets. The top row gives the predicted labels and the left column gives the actual (ground truth) labels. Ideally, the model should predict the class correctly, but this is not always the case. You want the diagonal cells from top left to bottom right to be as close to 100% as possible.
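The layout described above (actual labels as rows, predicted labels as columns, percentages per row) can be sketched in a few lines of Python. The label lists below are invented for illustration and are not results from this project.

```python
def confusion_matrix(actual, predicted, labels):
    """Count predictions: rows are actual labels, columns are predicted labels."""
    index = {label: i for i, label in enumerate(labels)}
    counts = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        counts[index[a]][index[p]] += 1
    return counts


def row_percentages(counts):
    """Convert each row to percentages of that row's total."""
    result = []
    for row in counts:
        total = sum(row)
        result.append([100.0 * c / total if total else 0.0 for c in row])
    return result


labels = ["Bedroom", "Hallway", "Outside"]
# Made-up ground truth and predictions, four windows per class.
actual = ["Bedroom"] * 4 + ["Hallway"] * 4 + ["Outside"] * 4
predicted = [
    "Bedroom", "Bedroom", "Bedroom", "Hallway",
    "Hallway", "Hallway", "Hallway", "Hallway",
    "Outside", "Outside", "Outside", "Bedroom",
]

counts = confusion_matrix(actual, predicted, labels)
percentages = row_percentages(counts)
for label, row in zip(labels, percentages):
    print(label, row)
```

In this invented example the diagonal reads 75%, 100%, and 75%, so one Bedroom window was mistaken for Hallway and one Outside window for Bedroom.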

If you find a lot of confusion between classes, it means you need to collect more data, try different features, use a different model architecture, or train for longer (more epochs). Check out this guide to learn ways to improve model performance.

On-device performance provides some statistics about how the model is likely to run on a specific device. By default, an Arm Cortex-M4F running at 80 MHz is assumed to be your target device. Actual memory requirements and runtime may vary by platform.

Rather than simply assuming that our model will work after deployment, we can run inference on the test dataset and on live data.

First, go to Model Testing and click Classify all. After a few moments, you should see the results of the test set.

You can click the three dots next to an item and select Show classification. This opens the classification result screen, where you can view the results for that test sample in more detail.

Additionally, we can test the impulse in a real-world environment to ensure the model is not overfitting the training data. To do this, go to Live classification. Make sure your device is connected to Studio and that the sensor, sample length, and frequency match the settings we originally used to capture the data.

Click Start Sampling. A new sample will be captured, uploaded, and classified from your board. Once completed, you should see the classification results.

In the example above, we sampled 10 seconds of data from the board. This data is split into 1-second windows (the window moves forward 0.5 seconds each time), and the data in each window is sent to the DSP block. The DSP block computes 49 features and sends them to the trained machine learning model, which performs a forward pass to provide us with the inference results.

As you can see, the inference result for all the windows claims that wukong2040 is in the bedroom, which is true! This is great news for our model: it seems to work even on unseen data.
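The windowing arithmetic can be checked with a short Python sketch. It assumes that only full windows are kept (no padding at the end of the sample), and the `window_starts` helper is a hypothetical name, not an Edge Impulse function.

```python
def window_starts(sample_ms, window_ms, increase_ms):
    """Start times (in ms) of every full window inside one sample."""
    if sample_ms < window_ms:
        return []
    return list(range(0, sample_ms - window_ms + 1, increase_ms))


# 10 s sample, 1 s window, 0.5 s window increase, as in this tutorial.
starts = window_starts(10_000, 1_000, 500)
print(len(starts))            # 19 windows
print(starts[0], starts[-1])  # 0 9000
```

Under that assumption, a single 10-second sample yields 19 overlapping windows, each of which is turned into a 49-feature vector and classified separately.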

Now that we have an impulse with a trained model and have tested its functionality, we can deploy the model back to our device. This means the impulse can run locally without an internet connection to perform inference!

Edge Impulse packages the entire impulse (preprocessing modules, neural network, and classification code) into a library that you can include in your embedded software.

Click Deployment in the menu. Select the library you want to create and click Generate at the bottom of the page.

Well done! You have trained a neural network that determines the location of your development board based on the fusion of multiple sensors working together. Note that this demonstration is quite limited – as daylight or temperature changes, the model will no longer be valid. But hopefully, it gives you some ideas on how to mix and match sensors to achieve your machine learning goals.

If you’re interested in more, check out our tutorials on recognizing sounds from audio or adding sight to your sensors. If you have a good idea for a different project, that’s okay too. Edge Impulse lets you capture data from any sensor, build custom processing blocks to extract features, and use learning blocks with full flexibility in your machine-learning pipeline.
